Networking Docker Containers

In this blog post, we will learn Docker networking concepts such as overlay networks, Docker Swarm, the embedded DNS server, and the routing mesh.

Three types of Docker networks

Let us first understand the three types of networks available in Docker: “bridge”, “none”, and “host”.

3 Types of Networks

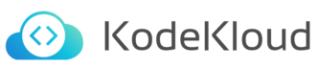

The bridge network is the default network a container gets attached to. If you would like to attach the container to any other network, specify it with the --network command-line parameter, like this:

<strong>docker run --network=<network> ubuntu</strong>

usage: docker run [OPTIONS] IMAGE [COMMAND] [ARG…]

The bridge network is a private, internal network created by Docker on the host. All containers attach to this network by default and get an internal IP address, usually in the 172.17.x.x range.

Containers can reach each other using these internal IPs if required. To make any of these containers accessible from the outside world, the Docker bridge architecture maps ports of the containers to ports on the Docker host.

Bridge network
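
To make the address range concrete, here is a small Python sketch using the standard `ipaddress` module. It assumes the default bridge subnet is 172.17.0.0/16, which is Docker's usual default but can be changed in the daemon configuration. The helper name and the sample addresses are made up for illustration.

```python
import ipaddress

# Assumption: Docker's usual default bridge subnet (configurable per host).
BRIDGE_SUBNET = ipaddress.ip_network("172.17.0.0/16")


def on_default_bridge(address: str) -> bool:
    """Return True if the address falls inside the assumed bridge subnet."""
    return ipaddress.ip_address(address) in BRIDGE_SUBNET


# Hypothetical container addresses, for illustration only.
for addr in ("172.17.0.2", "172.17.0.3", "10.0.9.5"):
    print(addr, on_default_bridge(addr))
```

The first two addresses fall inside the bridge subnet, so containers holding them could talk to each other directly on the same host; the third one would belong to some other network.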

Another type of network is the host network. This takes out any network isolation between the docker host and the docker containers.

For example, if you were to run a web server on port 5000 in a web-app container attached to the host network, it is automatically accessible on the same port externally, without having to publish the port using the -p option.

<strong>docker run --network=host ubuntu</strong>

usage: docker run --network=[bridge | none | host] [IMAGE]

Because the web container now uses the host’s network, you can no longer run multiple web containers on the same host and port, unlike before: ports are shared by all containers on the host network.

Host Network
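
The port clash is ordinary operating-system behavior rather than anything Docker-specific. The sketch below reproduces it with plain sockets: two listeners compete for the same address and port, standing in for two web containers on the host network. The `try_bind` helper is hypothetical, and an OS-assigned free port is used instead of 5000 so the example does not depend on that port being available.

```python
import socket
from typing import Optional


def try_bind(port: int) -> Optional[socket.socket]:
    """Try to listen on 127.0.0.1:port; return the socket, or None if taken."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        sock.bind(("127.0.0.1", port))
        sock.listen()
        return sock
    except OSError:
        # The address and port are already in use by another listener.
        sock.close()
        return None


# Port 0 asks the OS for any free port.
first = try_bind(0)
port = first.getsockname()[1]

# A second "web server" asking for the same port is refused.
second = try_bind(port)

print("first bound:", first is not None)
print("second bound:", second is not None)
first.close()
```

With bridge networking each container has its own network namespace, so both could listen on port 5000 and be published on different host ports. On the host network they share one namespace, and only the first listener wins.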

The third is the none network. Containers on it are not attached to any network and have no access to the external network or to other containers. They are isolated from all other networks.

None Network

<strong>docker run --network=none ubuntu</strong>

Bridge Network in a Multi-Node Cluster

Let’s take a look at the bridge network in a bit more detail.

For example, say we have multiple Docker hosts running containers. Each Docker host has its own internal private network in the 172.17.x.x range, allowing the containers running on that host to communicate with each other. However, containers on different hosts have no way of communicating with each other unless you publish the ports on those containers and set up some kind of routing yourself.

Manual routing setup required for cross-host container communication

Overlay Network in Docker Swarm

This is where an overlay network comes into play with Docker Swarm.

You can create a new network of type overlay, which will create an internal private network that spans all the nodes participating in the swarm cluster.

docker network create --driver overlay --subnet 10.0.9.0/24 my-overlay-network

usage: <code>docker network create [OPTIONS] NETWORK</code>

We can then attach containers or services to this network using the --network option while creating a service, so that they can communicate with each other through the overlay network.

docker service create --replicas N --network my-overlay-network nginx

usage: <code>docker service create [OPTIONS] IMAGE [COMMAND] [ARG...]</code>

Here N is the number of replicas you want to run.

An overlay network spanning three hosts
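
The difference between the two network types can be summarized in a toy reachability model. This is only a sketch of the reasoning above, not of how Docker implements it: the `Container` class, the `can_reach` function, and the host and container names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Container:
    name: str
    host: str      # Docker host the container runs on
    network: str   # name of the network it is attached to
    driver: str    # "bridge" or "overlay"


def can_reach(a: Container, b: Container) -> bool:
    """Model direct container-to-container reachability.

    bridge:  both containers must share the network on the same host.
    overlay: sharing the network is enough, since it spans the swarm nodes.
    """
    if a.network != b.network or a.driver != b.driver:
        return False
    if a.driver == "overlay":
        return True
    return a.host == b.host


web1 = Container("web1", "host1", "bridge", "bridge")
web2 = Container("web2", "host2", "bridge", "bridge")
api1 = Container("api1", "host1", "my-overlay-network", "overlay")
api2 = Container("api2", "host3", "my-overlay-network", "overlay")

print("bridge, different hosts:", can_reach(web1, web2))
print("overlay, different hosts:", can_reach(api1, api2))
```

Two containers on the default bridge of different hosts cannot reach each other directly, even though both networks are called "bridge", while two containers on the same overlay network can.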

How do multiple containers publish on the same port?

Previously, we learned about port publishing, or port mapping. Assume that we have a web service running on port 5000 in a container. For an external user to access the web service, we must map that port to a port on the Docker host; in this case, we map port 5000 on the container to port 80 on the Docker host.

Once we do that, a user will be able to access the web server using the host’s URL on port 80. This works and is easy to understand when running a single container, but not when we are working with a swarm cluster like the one shown.

For example, think of this host as a single node swarm cluster. Say we were to create a web-server service with two replicas and a port mapping of port 80 to 5000. Since this is a single-node cluster, both the instances are deployed on the same node. This will result in two web service containers both trying to map their 5000 ports to the common port 80 on the docker host, but we cannot have two mappings to the same port.

Mapping of replica ports to the same host port denied

Ingress Network

This is where ingress networking comes into the picture. When you create a Docker swarm cluster, it automatically creates an ingress network. The ingress network has a built-in load balancer that redirects traffic from the published port, which in this case is port 80, to the mapped port, port 5000, on each container. Since the ingress network is created automatically, there is no configuration that you have to do.

Ingress Network

You simply have to create the service you need by running the service create command and publish the ports you would like to publish using the -p parameter, just like before. The ingress network and the internal load balancing will simply work out of the box, but it is important for us to know how it really works.

docker service create --replicas=2 -p 80:5000 my-web-server

usage: docker service create [OPTIONS] IMAGE [COMMAND] [ARG…]

Let us now look at how it works when there are multiple nodes in the Docker swarm cluster. In this case, we have a three-node Docker swarm cluster running two instances of the web server. Since we only requested two replicas, the third Docker host is free and has no instances. Let us first keep ingress networking out of our discussion and see how this arrangement works without it.

Without the ingress networking, how do we expect the user to access our services in a swarm cluster of multiple nodes?

Third host finds services of the cluster inaccessible

Since this is a cluster, we expect users to be able to access services from any node in it, meaning any user should be able to access the web server using the IP address of any of these nodes, since they are all part of the same cluster.

Without ingress cluster networking, a user could access the web server on nodes 1 and 2 but not on node 3 because there is no web service instance running on node 3.

Let us now bring back ingress networking.

Ingress networking is in fact a type of overlay network that spans all the nodes in the cluster. Its load balancer receives requests on any node in the cluster and forwards them to a service instance, which may be running on any other node, essentially creating a routing mesh.

The routing mesh, created by an ingress network

The routing mesh routes user traffic that is received on a node that isn’t even running an instance of the web service to other nodes where the instances are actually running. Again, all of this is the default behavior of Docker swarm, and you don’t need to do any additional configuration. Simply create your service, specify the number of replicas, and publish the port. Docker swarm will ensure that the instances are distributed evenly across the cluster. The ports are published on all the nodes, and users can access the services using the IP of any of the nodes. When they do, traffic is routed to the right services internally.
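
The forwarding behavior can be sketched as a toy simulation. This models only the idea described above, not Swarm's actual implementation: the `RoutingMesh` class, the node and task names, and the round-robin choice of target are assumptions made for illustration.

```python
from itertools import cycle


class RoutingMesh:
    """Toy model of the swarm ingress routing mesh for one published port."""

    def __init__(self, nodes, tasks):
        # nodes: names of all swarm nodes; each one accepts the published port.
        # tasks: (node, task) pairs where service replicas actually run.
        self.nodes = set(nodes)
        self._next_task = cycle(tasks)  # round-robin is an assumption here

    def handle(self, entry_node):
        """Accept a request on any node and forward it to a running task."""
        if entry_node not in self.nodes:
            raise ValueError(f"{entry_node} is not part of the swarm")
        return next(self._next_task)


mesh = RoutingMesh(
    nodes=["node1", "node2", "node3"],
    tasks=[("node1", "web.1"), ("node2", "web.2")],
)

# node3 runs no replica, yet requests arriving there are still served.
for entry in ("node3", "node3", "node1"):
    target_node, task = mesh.handle(entry)
    print(f"request on {entry} -> {task} on {target_node}")
```

The point to notice is that the entry node and the node that serves the request are independent: a request that lands on node3 is answered by a replica on node1 or node2.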

How do containers discover each other?

We have been talking about containers communicating with each other. Now how exactly does that work?

For example, in this case I have a web service and a MySQL database service running on the same node or worker.

How can I get the web service to access the database on the database container? You could use the internal IP address of the MySQL container, which in this case is 172.17.0.3.

mysql.connect("172.17.0.3")

But that is not ideal, because the container is not guaranteed to get the same IP when the system reboots. The right way to do it is to use the container name. Containers on the same Docker network can resolve each other by container name (on a single host, this applies to user-defined networks rather than the default bridge). Docker has a built-in DNS server that helps containers resolve each other using the container name. Note that the built-in DNS server always runs at address 127.0.0.11.

Built-in DNS
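
Why a name is more robust than an address can be shown with a minimal stand-in for the DNS server. The `EmbeddedDNS` class below is hypothetical and only mimics a name-to-address table; the second address, 172.17.0.5, is made up to represent what the container might receive after a restart.

```python
class EmbeddedDNS:
    """Toy stand-in for Docker's built-in DNS: container name -> current IP."""

    def __init__(self):
        self._records = {}

    def register(self, name, ip):
        # Called whenever a container (re)starts and receives an address.
        self._records[name] = ip

    def resolve(self, name):
        return self._records[name]


dns = EmbeddedDNS()
dns.register("mysql", "172.17.0.3")
hardcoded_ip = "172.17.0.3"  # what the web service would hard-code

# After a restart the container may come back with a different address.
dns.register("mysql", "172.17.0.5")

print("by name:", dns.resolve("mysql"))  # follows the container
print("hard-coded:", hardcoded_ip)       # now stale
```

In the web service, this corresponds to connecting to the host name `mysql` instead of a literal IP address.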
