Image source: Platform9

With Docker, you can run a single instance of an application with a simple docker run command. For example, to run a Node.js-based application, you run the docker run nodejs command. But that's just one instance of your application on one Docker host. What happens when the number of users increases and that instance can no longer handle the load? You deploy additional instances of your application by running the docker run command multiple times. That is something you have to do yourself: you have to keep a close watch on the load and performance of your application and deploy additional instances as needed. And not just that, you also have to keep a close watch on the health of these instances.

Container Orchestration

And if a container were to fail, you should be able to detect that and run the docker run command again to deploy another instance of that application. What about the health of the Docker host itself? What if the host crashes and becomes inaccessible?

The containers on that host become inaccessible too. So what do you do to solve these issues? You would need a dedicated engineer who can sit and monitor the state, performance, and health of the containers and take the necessary actions to remediate the situation. But when you have large applications deployed with tens of thousands of containers, that's not a practical approach. You can build your own scripts, and that will help you tackle these issues to some extent. Container orchestration is a solution for exactly that: a set of tools and scripts that help host containers in a production environment.
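To make the "build your own scripts" idea concrete, here is a minimal sketch of what such a watchdog might do. It is a pure in-memory simulation, not real Docker tooling: the `Host` class, the `reconcile` function, and the `app-N` instance names are all hypothetical, and a real script would call the Docker CLI or API where this one only updates a set.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Host:
    """Hypothetical stand-in for one Docker host and the instances on it."""
    name: str
    healthy: bool = True
    instances: set = field(default_factory=set)


_ids = count(1)  # unique instance ids for the simulation


def reconcile(hosts, desired):
    """Start replacement instances until `desired` run on healthy hosts."""
    live = [h for h in hosts if h.healthy]
    if not live:
        raise RuntimeError("no healthy hosts left")
    # Instances on a crashed host are unreachable, so they no longer count.
    for h in hosts:
        if not h.healthy:
            h.instances.clear()
    running = sum(len(h.instances) for h in live)
    for _ in range(desired - running):
        # Place each replacement on the least-loaded healthy host.
        target = min(live, key=lambda h: len(h.instances))
        target.instances.add(f"app-{next(_ids)}")
    return sum(len(h.instances) for h in live)
```

Calling `reconcile` on a timer is roughly the job the dedicated engineer would otherwise do by hand: compare what should be running with what is, and start replacements for anything lost.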

Typically, a container orchestration solution consists of multiple Docker hosts that can host containers. That way even if one fails, the application is still accessible through the others. The container orchestration solution easily allows you to deploy hundreds or thousands of instances of your application with a single command.

Some orchestration solutions can automatically scale up the number of instances when the number of users increases and scale it down when demand decreases. Some can even automatically add hosts to support the user load. And beyond clustering and scaling, container orchestration solutions also provide advanced networking between containers across different hosts, as well as load balancing of user requests across those hosts. They also provide support for sharing storage between hosts, and for configuration management and security within the cluster.
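As an illustration of scaling with demand, the sketch below computes a new instance count in proportion to observed load. The function name, parameters, and default bounds are assumptions made for this example; the proportional rule itself resembles the one documented for the Kubernetes Horizontal Pod Autoscaler, but other orchestrators may use different rules.

```python
import math


def desired_replicas(current, metric, target, min_replicas=1, max_replicas=10):
    """Scale the replica count by the ratio of observed load to target load."""
    if target <= 0:
        raise ValueError("target must be positive")
    # More load than the target grows the count; less load shrinks it.
    wanted = math.ceil(current * metric / target)
    # Clamp so the result never leaves the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))
```

For example, with 4 instances averaging 90% CPU against a 60% target, `desired_replicas(4, 90, 60)` returns 6; if the average later drops to 15%, `desired_replicas(4, 15, 60)` returns 1.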

Orchestration solutions

There are multiple container orchestration solutions available today: Docker Swarm from Docker, Kubernetes from Google, and Mesos from Apache. While Docker Swarm is really easy to set up and get started with, it lacks some of the advanced auto-scaling features required for complex production-grade applications. Mesos, on the other hand, is quite difficult to set up and get started with but supports many advanced features.

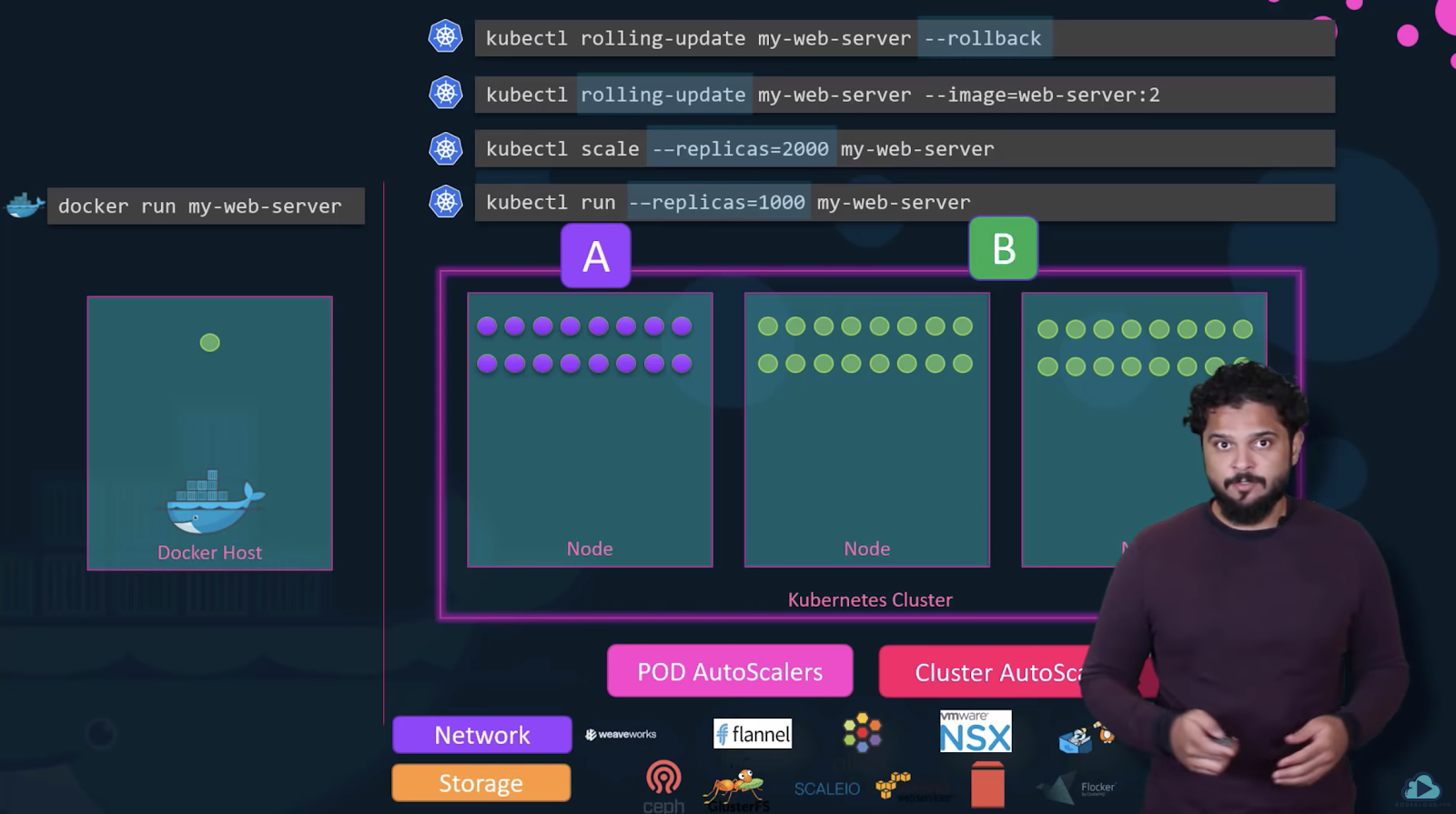

Kubernetes, arguably the most popular of them all, is a bit difficult to set up and get started with but provides a lot of options to customize deployments and has support for many different vendors. Kubernetes is now supported on all major public cloud providers, such as GCP, Azure, and AWS, and the Kubernetes project is one of the top-ranked projects on GitHub. With Docker, you were able to run a single instance of an application using the Docker CLI by running the docker run command, which is great: running an application has never been so easy. With Kubernetes, using the Kubernetes CLI known as kubectl, you can run 1,000 instances of the same application with a single command.

Kubernetes can scale that up to 2,000 with another command. Kubernetes can even be configured to do this automatically, so that the instances and the infrastructure itself scale up and down based on user load. Kubernetes can upgrade these 2,000 instances of the application in a rolling fashion, one at a time, with a single command. If something goes wrong, it can help you roll back to the previous images with a single command. Kubernetes can also help you test new features of your application by upgrading only a percentage of these instances, using A/B testing methods.
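The rolling upgrade and rollback described above can be sketched as a small simulation. This is not how Kubernetes implements it; `rolling_upgrade` and the `is_healthy` callback are hypothetical names, and the sketch rolls back automatically on the first failed check, whereas the text describes rollback as a separate command you run yourself.

```python
def rolling_upgrade(instances, new_version, is_healthy):
    """Upgrade one instance at a time; restore the old versions on failure.

    `instances` is a list of version strings, one per running instance.
    `is_healthy` is a caller-supplied check run after each replacement.
    Returns True if every instance was upgraded, False if rolled back.
    """
    previous = list(instances)  # snapshot used for rollback
    for i in range(len(instances)):
        instances[i] = new_version
        if not is_healthy(i, new_version):
            instances[:] = previous  # undo everything upgraded so far
            return False
    return True
```

Because only one instance changes at a time, the remaining instances keep serving traffic throughout, which is the point of upgrading in a rolling fashion.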

The open architecture of Kubernetes provides support for many different network and storage vendors, and plugins for Kubernetes are available from most major network and storage brands. Kubernetes supports a variety of authentication and authorization mechanisms. All major cloud service providers have native support for Kubernetes.

The relation between Docker and Kubernetes

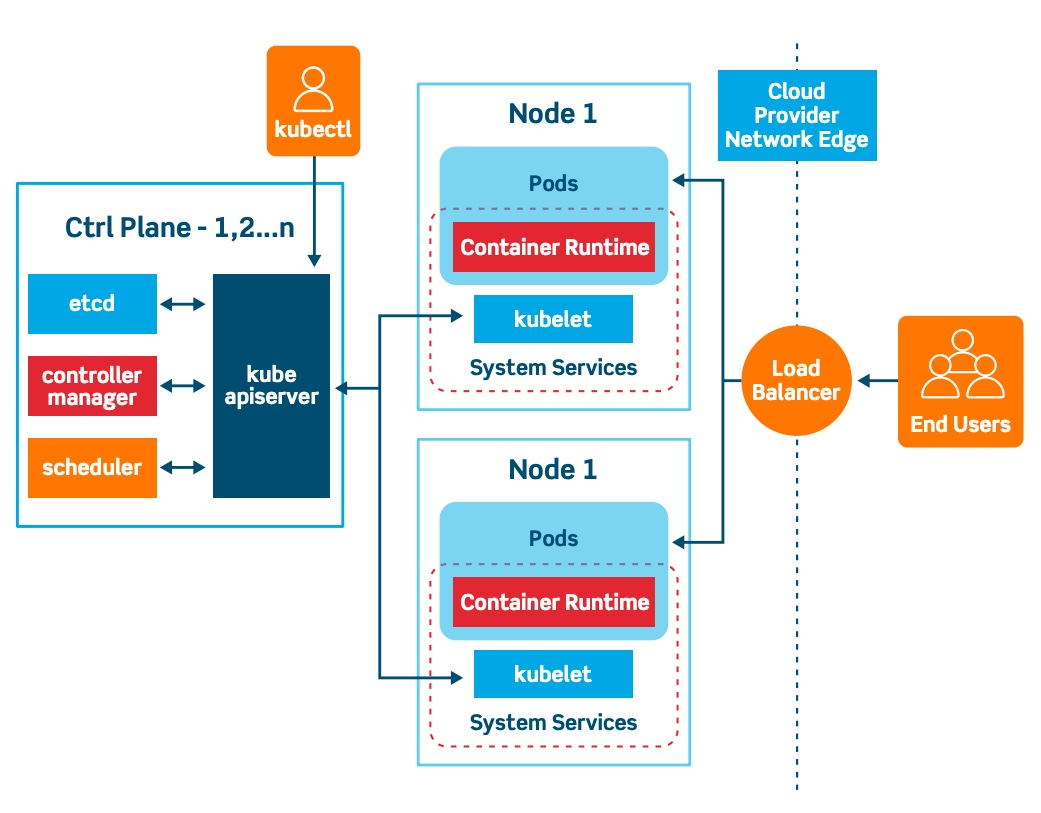

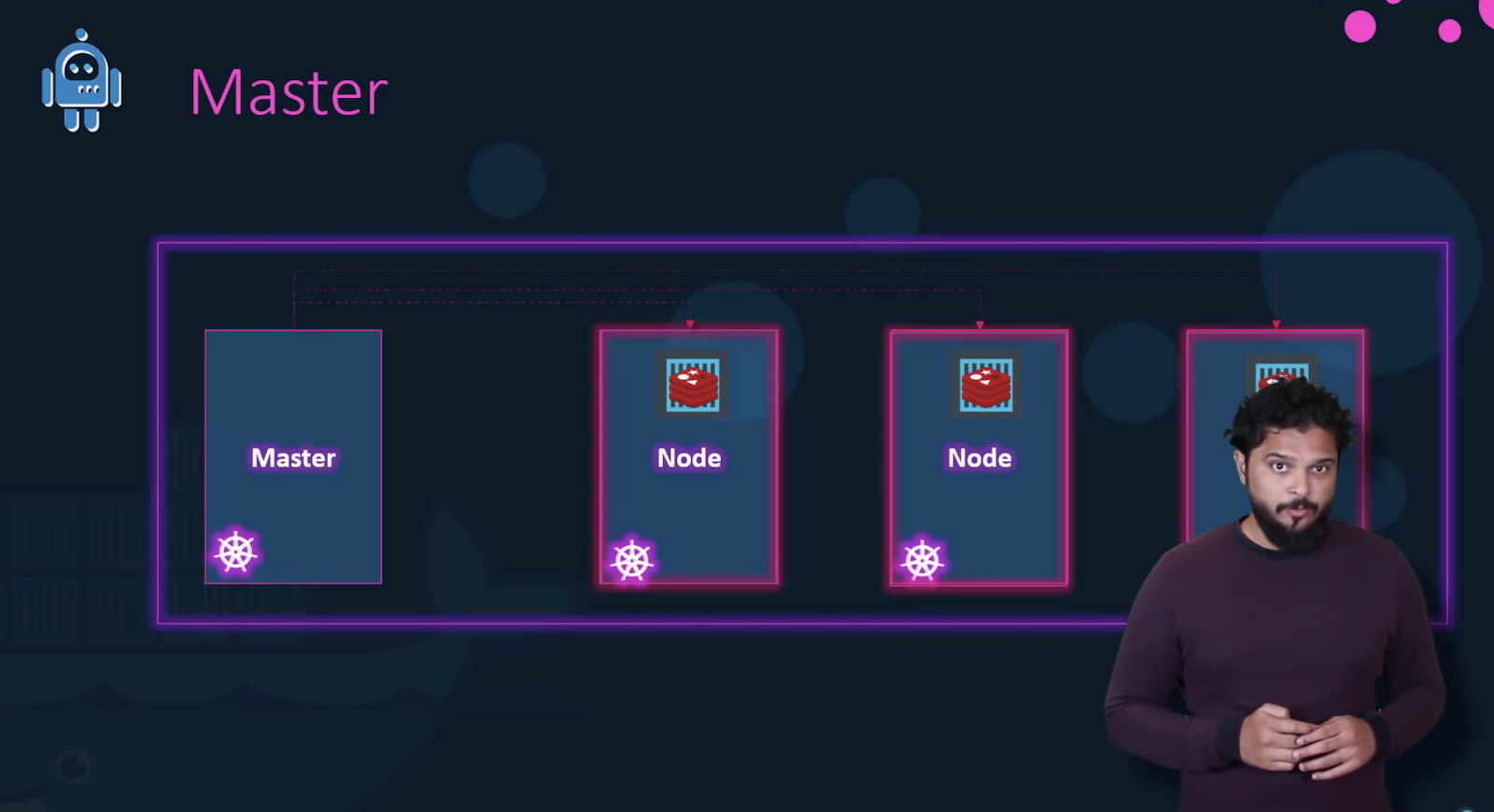

Kubernetes uses Docker hosts to host applications in the form of Docker containers. It need not be Docker all the time, though: Kubernetes supports alternatives to Docker as well, such as rkt (Rocket) or CRI-O. But let's take a quick look at the Kubernetes architecture.

A Kubernetes cluster consists of a set of nodes, so let us start with nodes. A node is a machine, physical or virtual, on which Kubernetes is installed. A node is a worker machine, and that is where containers will be launched by Kubernetes. But what if the node on which the application is running fails? Obviously, our application goes down. So you need to have more than one node. A cluster is a set of nodes grouped together. This way, even if one node fails, your application is still accessible from the other nodes.

Now we have a cluster, but who is responsible for managing it? Where is the information about the members of the cluster stored? How are the nodes monitored? When a node fails, how do you move its workload to another worker node? That's where the master comes in. The master is a node with the Kubernetes control plane components installed.

The master watches over the nodes in the cluster and is responsible for the actual orchestration of containers on the worker nodes.

When you install Kubernetes on a system, you're actually installing the following components: an API server, an etcd server, a kubelet service, a container runtime engine like Docker, a bunch of controllers, and the scheduler.

The API server acts as the front end for Kubernetes. Users, management devices, and command-line interfaces all talk to the API server to interact with the Kubernetes cluster. Next is the etcd key-value store. etcd is a distributed, reliable key-value store used by Kubernetes to store all the data used to manage the cluster. Think of it this way: when you have multiple nodes and multiple masters in your cluster, etcd stores all that information on all the nodes in the cluster in a distributed manner. etcd is also responsible for implementing locks within the cluster to ensure there are no conflicts between the masters. The scheduler is responsible for distributing work, or containers, across multiple nodes. It looks for newly created containers and assigns them to nodes. The controllers are the brain behind orchestration. They are responsible for noticing and responding when nodes, containers, or endpoints go down, and they make decisions to bring up new containers in such cases.
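To illustrate the scheduler's job of assigning newly created containers to nodes, here is a toy placement function. It is only a sketch under simplifying assumptions: the `schedule` function, the single "CPU units" resource, and the "most free capacity wins" rule are invented for this example, and the real Kubernetes scheduler weighs many more factors.

```python
def schedule(pending, nodes):
    """Assign each pending container to the node with the most free capacity.

    `pending` maps container name -> CPU units requested.
    `nodes` maps node name -> free CPU units (mutated as work is placed).
    Returns a dict of container -> node; unplaceable containers map to None.
    """
    placement = {}
    for name, need in pending.items():
        # Filter: keep only nodes with enough free capacity.
        fits = [n for n, free in nodes.items() if free >= need]
        if not fits:
            placement[name] = None  # stays pending until capacity appears
            continue
        # Score: prefer the node with the most free capacity.
        best = max(fits, key=lambda n: nodes[n])
        nodes[best] -= need
        placement[name] = best
    return placement
```

A container that fits nowhere is left unassigned rather than forced onto a full node, mirroring the idea that work waits until a suitable node is available.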

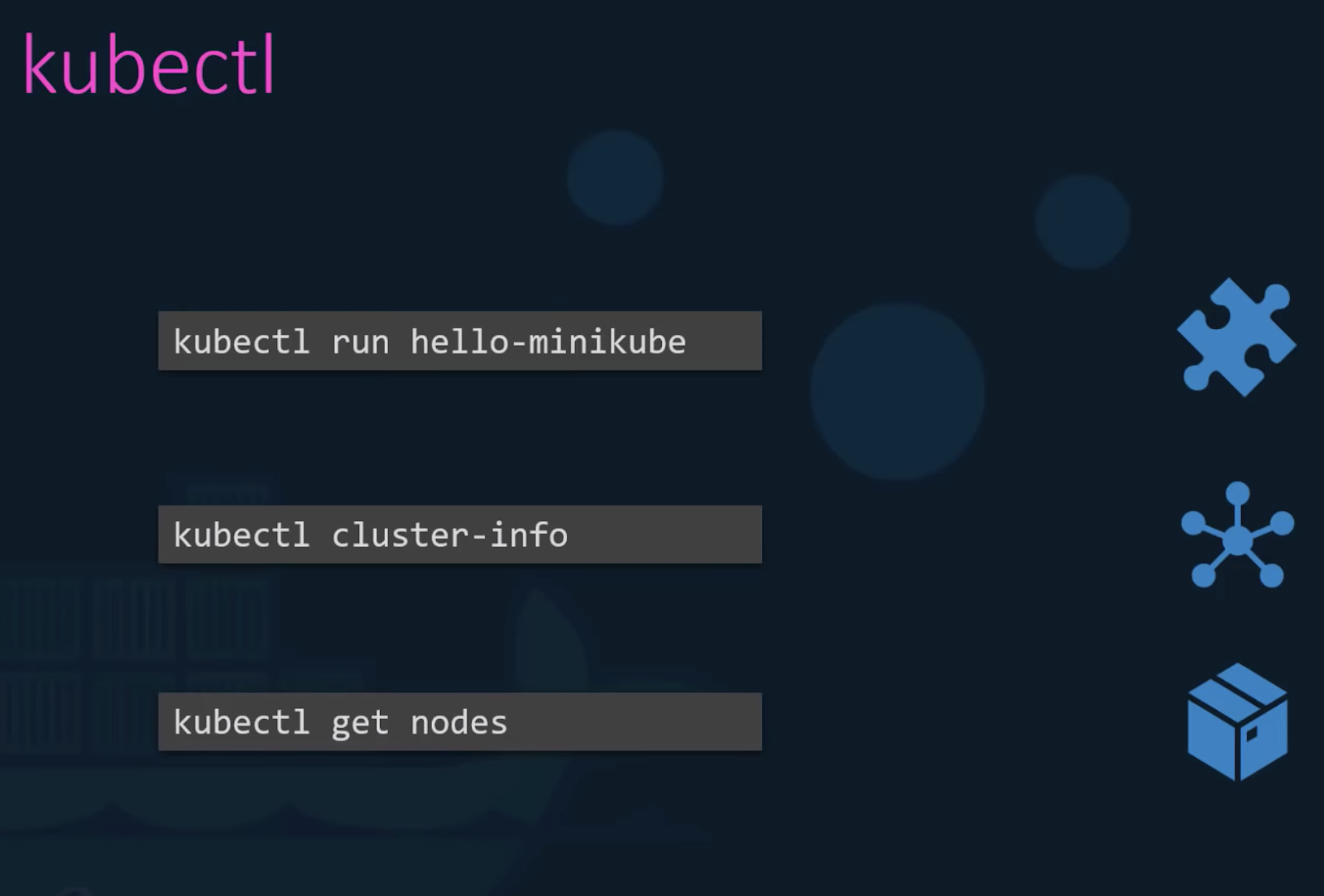

The container runtime is the underlying software that is used to run containers. In our case, it happens to be Docker. Kubelet is the agent that runs on each node in the cluster. The agent is responsible for making sure that the containers are running on the nodes as expected.

Finally, we also need to learn a little bit about one of the command-line utilities: kubectl, also pronounced "kube control" or "kube cuddle". kubectl is the Kubernetes CLI, which is used to deploy and manage applications on a Kubernetes cluster, to get cluster-related information, to get the status of the nodes in the cluster, and many other things. The kubectl run command is used to deploy an application on the cluster, the kubectl cluster-info command is used to view information about the cluster, and the kubectl get nodes command is used to list all the nodes that are part of the cluster. So, to run hundreds of instances of your application across hundreds of nodes, all I need is a single kubectl command.
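As a rough sketch of what these commands look like, the helpers below build kubectl argument lists without executing anything. The helper names and the `my-web-app` name and image are hypothetical. The deployment helper assumes a recent kubectl in which `kubectl create deployment` accepts `--replicas`; the text above names `kubectl run`, which in recent versions starts a single pod, so check the behavior of your own kubectl version.

```python
def kubectl(*args):
    """Return the argv list for a kubectl invocation (nothing is executed)."""
    return ["kubectl", *args]


def deploy(name, image, replicas):
    # Assumes a kubectl version where `create deployment` supports --replicas.
    return kubectl("create", "deployment", name,
                   f"--image={image}", f"--replicas={replicas}")


def scale(name, replicas):
    # Change the number of instances of an existing deployment.
    return kubectl("scale", f"deployment/{name}", f"--replicas={replicas}")


def cluster_info():
    # View information about the cluster.
    return kubectl("cluster-info")


def get_nodes():
    # List all the nodes that are part of the cluster.
    return kubectl("get", "nodes")
```

Joining the result of `deploy("my-web-app", "my-web-app", 100)` with spaces gives `kubectl create deployment my-web-app --image=my-web-app --replicas=100`; passing the list to `subprocess.run` would execute it against whatever cluster kubectl is configured for.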

Well, that's all we have for now: a quick introduction to Kubernetes and its architecture. We currently have four Kubernetes courses on KodeKloud that will take you from an absolute beginner to a certified expert, so have a look at them.
